Threading & Multiprocessing

Comparing Multithreading vs. Multiprocessing in Python: CPU-bound vs. I/O-bound Tasks

When optimizing Python programs for performance, choosing between multithreading and multiprocessing is crucial. This post explores their differences in CPU-bound and I/O-bound workloads through experiments and data visualization.

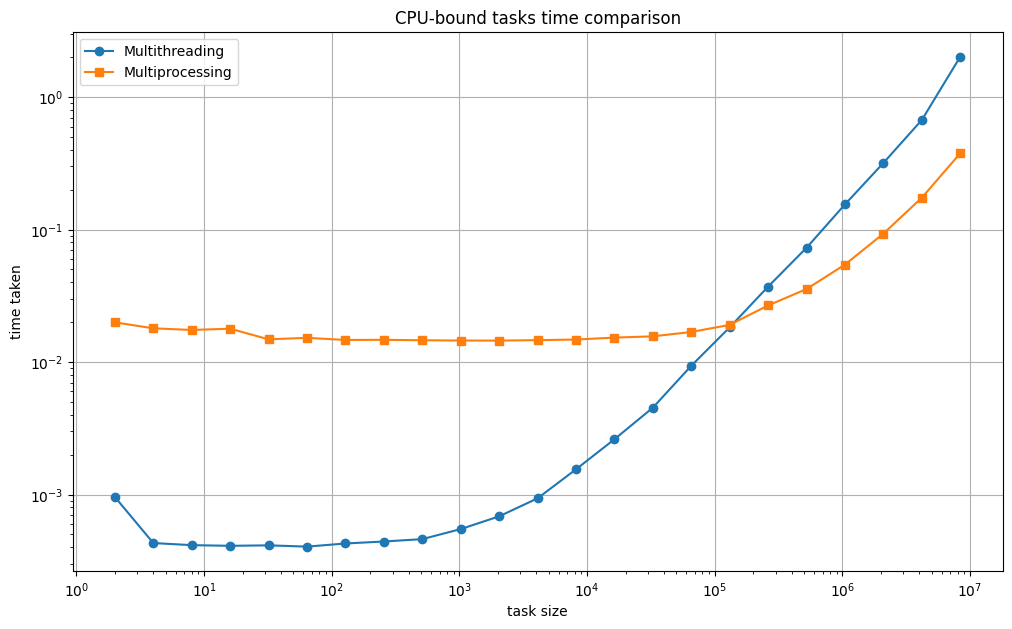

1. CPU-bound Tasks: Heavy Computation

Experiment Summary

I performed an experiment where multiple threads and processes executed a computationally intensive task (e.g., calculating prime numbers or matrix multiplications). The execution times were measured for increasing workload sizes.
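The benchmark code itself isn't shown in this post, so here is a minimal sketch of what such an experiment could look like. The function names (`count_primes`, `run_with`), the worker count, and the workload sizes are illustrative assumptions, not the exact setup behind the chart below.

```python
import math
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    """Count primes below `limit` by trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        # n is prime if no d in [2, isqrt(n)] divides it
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count


def run_with(executor_cls, limits, workers=4):
    """Run count_primes over `limits` on the given executor.

    Returns (elapsed_seconds, results).
    """
    start = time.perf_counter()
    with executor_cls(max_workers=workers) as pool:
        results = list(pool.map(count_primes, limits))
    return time.perf_counter() - start, results


if __name__ == "__main__":
    # Hypothetical workload sizes; increase them to see the gap widen.
    for limit in (1_000, 10_000, 50_000):
        limits = [limit] * 4
        t_threads, _ = run_with(ThreadPoolExecutor, limits)
        t_procs, _ = run_with(ProcessPoolExecutor, limits)
        print(f"limit={limit:>6}  threads={t_threads:.3f}s  processes={t_procs:.3f}s")
```

The `if __name__ == "__main__":` guard matters here: on platforms that start worker processes by re-importing the main module, leaving it out can make the script try to spawn processes recursively.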

Observations

- Threading struggled on larger workloads because of Python’s Global Interpreter Lock (GIL), which prevents multiple threads from executing Python bytecode in parallel. It held up well on smaller tasks, where low startup cost matters more than parallelism.

- Multiprocessing was significantly faster on larger workloads because it used multiple CPU cores, with separate processes running in true parallel. It was slower on smaller tasks because spawning processes costs more than starting threads.

CPU-bound Execution Time Comparison

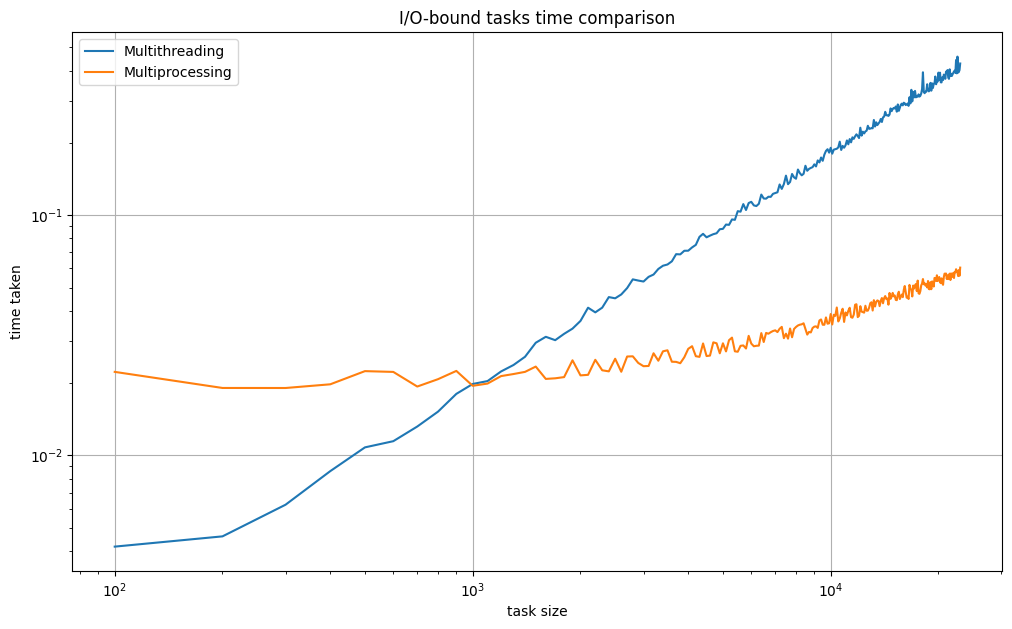

2. I/O-bound Tasks: File Read/Write

Experiment Summary

In this experiment, I simulated an I/O-heavy workload by reading and writing large files in parallel using both multithreading and multiprocessing.
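As a rough illustration, an experiment like this could be sketched as follows. The helper names (`write_then_read`, `time_io`), the file sizes, and the file count are assumptions made for the example. Keep in mind that the operating system's page cache may serve the read-back from memory, so small files in particular may never touch the disk on the read side.

```python
import os
import tempfile
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def write_then_read(path, size_bytes):
    """Write `size_bytes` of data to `path`, read it back, return the byte count."""
    with open(path, "wb") as f:
        f.write(b"x" * size_bytes)
    with open(path, "rb") as f:
        return len(f.read())


def time_io(executor_cls, directory, size_bytes, n_files=4):
    """Time `n_files` write/read round trips, one worker per file.

    Returns (elapsed_seconds, bytes_read_per_file).
    """
    paths = [os.path.join(directory, f"file_{i}.bin") for i in range(n_files)]
    start = time.perf_counter()
    with executor_cls(max_workers=n_files) as pool:
        totals = list(pool.map(write_then_read, paths, [size_bytes] * n_files))
    return time.perf_counter() - start, totals


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical file sizes: 64 KiB and 4 MiB.
        for size in (64 * 1024, 4 * 1024 * 1024):
            t_threads, _ = time_io(ThreadPoolExecutor, tmp, size)
            t_procs, _ = time_io(ProcessPoolExecutor, tmp, size)
            print(f"{size // 1024:>6} KiB  threads={t_threads:.3f}s  processes={t_procs:.3f}s")
```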

Observations

- Multithreading was initially faster for smaller file sizes since it could interleave execution while waiting on disk operations.

- Multiprocessing eventually outperformed threading for larger I/O tasks, as separate processes handled file I/O independently.

- Process creation overhead made multiprocessing inefficient for small workloads.
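One way to see the creation overhead in isolation is to start workers that do nothing at all, so that startup and teardown dominate the measurement. This is only a sketch: `noop` and `time_start_join` are hypothetical helpers, and the numbers depend heavily on the operating system and on how it starts new processes.

```python
import multiprocessing
import threading
import time


def noop():
    """Do nothing, so the timing reflects startup and teardown only."""


def time_start_join(worker_cls, n=8):
    """Start and join `n` workers of `worker_cls`; return elapsed seconds.

    `worker_cls` is threading.Thread or multiprocessing.Process, which
    share the same target/start/join interface.
    """
    workers = [worker_cls(target=noop) for _ in range(n)]
    start = time.perf_counter()
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"threads:   {time_start_join(threading.Thread):.4f}s")
    print(f"processes: {time_start_join(multiprocessing.Process):.4f}s")
```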

I/O-bound Execution Time Comparison

3. But Why Choose Threading for I/O Bound Tasks?

Understanding "Interleaving"

The key reason threading works well for I/O-bound tasks is that these tasks spend a lot of time waiting (e.g., waiting for a file to be read from disk or a network request to complete).

For example:

- Thread A starts reading a file but must wait for the disk to send data.

- Instead of the program sitting idle, Thread A releases the GIL while it waits, so Thread B gets to run. B might be writing to a different file or reading another part of the disk.

- When the disk is ready, Thread A resumes while other threads keep working.

Since the CPU is mostly idle during these waits, switching between threads lets the waits overlap, so the whole job finishes sooner than it would if each wait happened one after another.
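The effect is easy to reproduce with a toy example. In the sketch below, `time.sleep` stands in for a blocking disk or network call (like real blocking I/O in CPython, it releases the GIL while waiting). The names `fake_io` and `run_interleaved` are made up for illustration.

```python
import threading
import time


def fake_io(name, delay, log):
    """Pretend to wait on a disk or network for `delay` seconds."""
    log.append(f"{name} start")
    time.sleep(delay)  # blocking wait; other threads can run meanwhile
    log.append(f"{name} done")


def run_interleaved(delays):
    """Run one fake I/O task per delay, each in its own thread.

    Returns (elapsed_seconds, event_log).
    """
    log = []
    threads = [
        threading.Thread(target=fake_io, args=(f"T{i}", delay, log))
        for i, delay in enumerate(delays)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start, log


if __name__ == "__main__":
    delays = [0.3, 0.3, 0.3]
    elapsed, log = run_interleaved(delays)
    print(f"sum of waits: {sum(delays):.1f}s, wall time: {elapsed:.2f}s")
    print(log)
```

All three tasks start before any of them finishes, and the wall time ends up close to the longest single wait rather than the sum of all of them.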

Why Doesn’t Multiprocessing Win for I/O?

Threads Share Memory, Processes Don’t

- Threads within the same process share memory and can quickly switch between I/O operations.

- Processes have separate memory spaces, so they don’t communicate as efficiently.

- A single-threaded process that is blocked on I/O does no other work until the call returns. Overlapping I/O across processes means running more of them, each with its own startup and memory cost.

Context Switching is Heavier for Processes

- To switch between processes, the OS has to save one process’s registers and execution state and switch to the other’s address space.

- This is typically slower than switching between threads of the same process, which are lighter and share one address space.

I/O Operations Are Often the Bottleneck Anyway

- In an I/O-bound task (like file read/write), the bottleneck is usually disk speed or network latency, not the CPU.

- Since threading allows efficient switching between tasks without heavy process overhead, it wins.

When Would Multiprocessing Help in I/O?

- If each process handles completely separate files and avoids communication overhead.

- If I/O is mixed with heavy CPU work, where multiprocessing can handle CPU-bound parts in parallel.

So, for I/O tasks like file handling, web scraping, and database queries, threading is usually better than multiprocessing.

Final Takeaways

- Multiprocessing is best for CPU-heavy tasks, but it comes with higher memory and process creation overhead.

- Multithreading is great for I/O-heavy tasks, especially when waiting on external resources (disk, network).